Tech

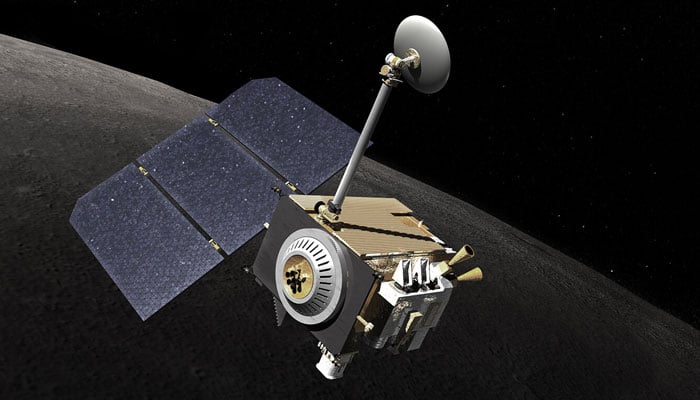

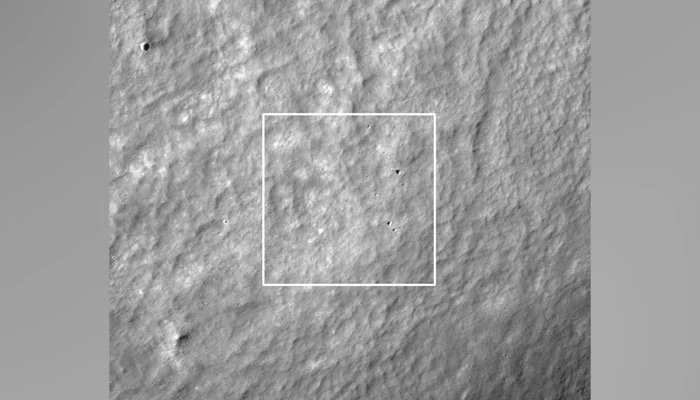

NASA finds evidence of Japanese lunar lander’s crash on the moon

Latest News

Punjab will provide 50,000 solar kits.

Pakistan

There will be free WiFi in public parks.

Pakistan

FM Ishaq Dar praises IAEA for using nuclear technology in a “peaceful” manner

- Latest News, 1 day ago: To discuss the judges’ letter, the IHC CJ calls for a full court meeting.

- Latest News, 1 day ago: Nawaz Sharif departs for a five-day personal visit to China.

- Latest News, 2 days ago: President Raisi of Iran and FM Dar talk about bilateral relations.

- Latest News, 7 hours ago: The IHC defers making a ruling on cases involving the same complaint against Sheikh Rashid.

- Latest News, 2 days ago: Hydrocarbon deposits discovered by Mari Petroleum in Daharki, Sindh

- Business, 2 days ago: Outsourcing: Investors from Turkey stop by the airport in Karachi

- Latest News, 2 days ago: Iran Avenue is the new name of the highway in Islamabad, Pakistan.

- Latest News, 2 days ago: For the Pakistani team’s T20I against New Zealand, Haseebullah has replaced Azam Khan.